Introduction: The Memory Challenge in Modern AI

The rapid evolution of Artificial Intelligence, particularly with the advent of sophisticated Large Language Models (LLMs), has brought unprecedented capabilities but also significant challenges. As AI systems become more complex and data-hungry, their ability to "remember," understand context, and retrieve relevant information efficiently becomes paramount. Traditional data storage and retrieval mechanisms, designed for exact keyword matches and structured data, often fall short, leading to inconsistent results, scalability bottlenecks, and ballooning operational costs.

This is where embeddings and vector databases come in. They are not incremental improvements but a foundational shift: instead of matching exact keywords, AI systems can retrieve information by meaning. This guide explains how these technologies power intelligent retrieval and scalable performance. It moves from the foundational concepts of vector embeddings to vector databases, explores practical applications such as semantic search and Retrieval Augmented Generation (RAG), and covers how to choose similarity metrics for efficient, cost-effective AI systems.

The Foundation of AI Memory: Embeddings and Vector Representation

At the heart of modern AI's ability to "remember" and understand lies a concept called embeddings. These are not traditional data records but rather numerical representations that capture the essence, meaning, and relationships of complex data. By transforming text, images, audio, and other qualitative data into a quantitative format, embeddings allow AI to process and reason about information in a way that mimics human understanding of context and similarity.

Paul Pajo, a researcher affiliated with De La Salle-College of Saint Benilde, highlights that "Vector embeddings, numerical representations of complex data such as text, images, and audio, have become foundational in machine learning by encoding semantic relationships in high-dimensional spaces" [1]. This encoding of semantic relationships is what truly extends the reach of AI models, as recognized by Microsoft's .NET documentation on embeddings [3].

What Are Embeddings? The Language of AI

Embeddings are dense vector representations of data. Imagine taking a word, a sentence, an entire document, an image, or a piece of audio, and transforming it into a list of numbers – a vector. This vector is not random; it's carefully constructed such that the numerical relationships between these vectors reflect the semantic and contextual relationships of the original data.

Key Characteristics of Embeddings:

- Dimensionality: Typically ranges from around a hundred to a few thousand dimensions

- Density: Most values are non-zero (unlike sparse representations)

- Semantic encoding: Similar concepts have similar vector representations

- Mathematical operations: Vector arithmetic on embeddings can express relationships between concepts
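To make the density point concrete, here is a minimal sketch contrasting a sparse one-hot vector with a dense one. The five-word vocabulary and the dense values are invented for illustration; a real model would learn its vectors from data.

```python
import numpy as np

# Hypothetical five-word vocabulary, used only for this illustration
vocab = ["king", "queen", "man", "woman", "apple"]

def one_hot(word):
    """Sparse representation: one dimension per vocabulary word."""
    vec = np.zeros(len(vocab))
    vec[vocab.index(word)] = 1.0
    return vec

def density(vec):
    """Fraction of entries that are non-zero."""
    return np.count_nonzero(vec) / vec.size

sparse_king = one_hot("king")
dense_king = np.array([0.8, 0.2, 0.1])  # made-up stand-in for a learned embedding

print(f"sparse: dims={sparse_king.size}, density={density(sparse_king):.2f}")
print(f"dense:  dims={dense_king.size}, density={density(dense_king):.2f}")

# One-hot vectors of two different words are always orthogonal,
# so they carry no notion of similarity between words.
print("one-hot king . queen =", one_hot("king") @ one_hot("queen"))
```

The one-hot vector grows with the vocabulary and says nothing about how words relate, which is the gap dense embeddings are meant to close.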

Practical Example: Word Embeddings

    import numpy as np
    from sklearn.metrics.pairwise import cosine_similarity

    # Simplified example of word embeddings (real embeddings have hundreds of dimensions)
    embeddings = {
        "king": np.array([0.8, 0.2, 0.1]),
        "queen": np.array([0.75, 0.25, 0.15]),
        "man": np.array([0.7, 0.3, 0.2]),
        "woman": np.array([0.65, 0.35, 0.25]),
        "apple": np.array([0.1, 0.9, 0.1]),
        "orange": np.array([0.15, 0.85, 0.05])
    }

    # Semantic relationships preserved in vector space
    king_vector = embeddings["king"]
    man_vector = embeddings["man"]
    woman_vector = embeddings["woman"]

    # The famous analogy: king - man + woman ≈ queen
    result_vector = king_vector - man_vector + woman_vector

    # Find the closest embedding to the result
    similarities = {}
    for word, vector in embeddings.items():
        similarity = cosine_similarity(result_vector.reshape(1, -1), vector.reshape(1, -1))[0][0]
        similarities[word] = similarity

    most_similar = max(similarities, key=similarities.get)
    print(f"king - man + woman ≈ {most_similar} (similarity: {similarities[most_similar]:.3f})")

How Embeddings Capture Semantic Meaning and Context

The magic of embeddings lies in their ability to capture semantic meaning and context through sophisticated training processes:

Training Process:

- Context window analysis: Models analyze words in context

- Prediction tasks: Learn to predict missing words or next words

- Dimensionality reduction: Capture meaning in lower-dimensional space

- Relationship preservation: Maintain semantic relationships mathematically
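The first two steps can be sketched in a few lines. The example below builds (center, context) training pairs from a sentence, in the style of skip-gram training, where a model learns to predict the context word from the center word. The function name `context_pairs` and the sample sentence are illustrative assumptions, and the model that would consume these pairs is left out.

```python
def context_pairs(tokens, window=2):
    """Return (center, context) pairs for tokens within `window` positions of each other."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

sentence = "the queen rules the kingdom".split()
pairs = context_pairs(sentence, window=1)

for center, context in pairs:
    print(f"{center} -> {context}")
```

Words that keep appearing in similar contexts end up with similar training signals, which is why their learned vectors drift close together.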

Embedding Types and Their Applications:

The Role of Embeddings in AI's 'Memory' Simulation

AI doesn't possess true biological memory but simulates it through embedding-based retrieval:

- Information encoding: Convert experiences to embeddings

- Storage: Save embeddings in vector databases

- Retrieval: Find similar embeddings when needed

- Context reconstruction: Use retrieved embeddings to inform responses

This mechanism enables various AI capabilities:

- Conversational memory: Remembering past interactions

- Knowledge retrieval: Accessing relevant information

- Context awareness: Maintaining conversation context

- Personalization: Remembering user preferences
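The encode, store, and retrieve steps can be sketched with a small in-memory store. This is a toy under stated assumptions: `BagOfWordsEmbedder` is a hypothetical stand-in for a real embedding model and only captures word overlap, not meaning, and `MemoryStore` keeps everything in a Python list rather than a vector database.

```python
import numpy as np

class BagOfWordsEmbedder:
    """Stand-in for a real embedding model: counts words from a fixed vocabulary."""
    def __init__(self, vocabulary):
        self.index = {word: i for i, word in enumerate(vocabulary)}

    def encode(self, text):
        vec = np.zeros(len(self.index))
        for token in text.lower().split():
            if token in self.index:  # unknown words are ignored
                vec[self.index[token]] += 1.0
        norm = np.linalg.norm(vec)
        return vec / norm if norm > 0 else vec

class MemoryStore:
    def __init__(self, embedder):
        self.embedder = embedder
        self.texts = []
        self.vectors = []

    def remember(self, text):
        # Information encoding + storage
        self.texts.append(text)
        self.vectors.append(self.embedder.encode(text))

    def recall(self, query, top_k=1):
        # Retrieval: cosine similarity equals the dot product for unit vectors
        scores = np.array(self.vectors) @ self.embedder.encode(query)
        best = np.argsort(-scores)[:top_k]
        return [(self.texts[i], float(scores[i])) for i in best]

facts = [
    "user prefers vegetarian recipes",
    "user lives in Berlin",
    "user is learning Rust",
]
vocabulary = sorted({w for fact in facts for w in fact.lower().split()})

memory = MemoryStore(BagOfWordsEmbedder(vocabulary))
for fact in facts:
    memory.remember(fact)

# Context reconstruction: the recalled text would be passed to the model as context
recalled = memory.recall("which recipes does the user like", top_k=1)
print(recalled[0][0])
```

Swapping the toy embedder for a trained model changes what counts as "similar" without changing the shape of the loop.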

Vector Databases: Architecture, Scalability, and High-Performance Storage for AI

While embeddings provide the "language" for AI memory, vector databases provide the "brain" – the specialized infrastructure for storing, indexing, and efficiently querying these high-dimensional numerical representations.

Why Traditional Databases Fail AI's Memory Needs

Traditional databases, built around exact-match and range queries on structured columns, face fundamental limitations with vector data:

- Brute-force search required: No native support for similarity search

- High-dimensional inefficiency: Conventional indexes lose their effectiveness once vectors reach hundreds of dimensions

- Scalability limitations: Struggle with billions of vectors

- Specialized indexing lacking: No optimized index structures for vectors
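To illustrate what a specialized index buys, here is a toy sketch of an inverted-file (IVF) style index next to exact brute-force search. It is a simplification under stated assumptions, not how any particular vector database is implemented: the data is random, the class name `CoarseIndex` is hypothetical, and centroids are sampled data points where real systems usually run k-means.

```python
import numpy as np

rng = np.random.default_rng(0)
vectors = rng.normal(size=(1000, 32))
vectors /= np.linalg.norm(vectors, axis=1, keepdims=True)  # unit length

def brute_force(query, k):
    """Exact search: score every stored vector."""
    scores = vectors @ query
    return np.argsort(-scores)[:k]

class CoarseIndex:
    """Toy inverted-file index: vectors are bucketed by their best-matching centroid."""
    def __init__(self, data, n_buckets=16):
        self.data = data
        self.centroids = data[rng.choice(len(data), n_buckets, replace=False)]
        assignment = np.argmax(data @ self.centroids.T, axis=1)
        self.buckets = [np.flatnonzero(assignment == b) for b in range(n_buckets)]

    def query(self, q, k, n_probe=4):
        # Search only the n_probe buckets whose centroids best match the query
        probe = np.argsort(-(self.centroids @ q))[:n_probe]
        candidates = np.concatenate([self.buckets[b] for b in probe])
        scores = self.data[candidates] @ q
        return candidates[np.argsort(-scores)[:k]]

index = CoarseIndex(vectors)
query = vectors[0]

exact = brute_force(query, k=5)
approx = index.query(query, k=5, n_probe=4)
overlap = len(set(exact.tolist()) & set(approx.tolist()))
print(f"approximate search recovered {overlap} of the 5 exact neighbours")
```

The trade-off is explicit in `n_probe`: probing fewer buckets means fewer comparisons but a higher chance of missing a true neighbour, while probing every bucket reproduces the exact result.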

Performance Comparison:

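    import numpy as np

    def cosine(a, b):
        """Cosine similarity between two 1-D vectors."""
        a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
        return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

    # Traditional SQL approach (conceptual)
    def sql_similarity_search(query_vector, rows, top_k=10):
        # Without a vector index, every row has to be compared: O(N) work per query
        results = []
        for row in rows:  # rows: iterable of dicts with 'id' and 'embedding' keys
            similarity = cosine(query_vector, row['embedding'])
            results.append((row['id'], similarity))
        return sorted(results, key=lambda x: x[1], reverse=True)[:top_k]

    # Vector database approach (conceptual)
    def vector_db_search(query_vector, vector_index, top_k=10):
        # Uses specialized indexing for efficient search.
        # `vector_index` stands in for a client object exposing query(vector, k=...);
        # the exact call depends on the database you use.
        return vector_index.query(query_vector, k=top_k)

Semantic Search and Retrieval Augmented Generation (RAG) with Vectors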

The combination of embeddings and vector databases enables revolutionary applications in semantic search and RAG systems.

Beyond Keywords: The Power of Semantic Search

Traditional keyword search vs. semantic search:

Keyword Search Limitations:

- Exact match required

- No understanding of synonyms

- Misses contextual meaning

- Poor handling of ambiguity

Semantic Search Advantages:

- Understands intent and meaning

- Handles synonyms and related concepts

- Context-aware results

- Natural language understanding
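The synonym problem is easy to reproduce. In the sketch below, a query shares no words with either document, so keyword overlap cannot rank them, while vector similarity can. The 2-D vectors are hand-made stand-ins for model output (roughly "vehicle-ness" and "food-ness") and are an assumption of this example, not real embeddings.

```python
import numpy as np

documents = ["automobile repair manual", "fruit salad recipe"]
query = "car maintenance guide"

def keyword_score(query, doc):
    """Number of words shared between query and document."""
    return len(set(query.split()) & set(doc.split()))

# Hand-made vectors for illustration only: [vehicle-ness, food-ness]
toy_vectors = {
    "automobile repair manual": np.array([0.9, 0.1]),
    "fruit salad recipe": np.array([0.1, 0.9]),
    "car maintenance guide": np.array([0.85, 0.15]),
}

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

for doc in documents:
    kw = keyword_score(query, doc)
    sem = cosine(toy_vectors[query], toy_vectors[doc])
    print(f"{doc!r}: keyword={kw}, semantic={sem:.3f}")
```

Both documents score zero on keyword overlap, yet the semantic score clearly separates the relevant one from the irrelevant one.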

Implementation Example:

class SemanticSearchEngine:

def __init__(self, embedding_model, vector_db):

self.embedding_model = embedding_model

self.vector_db = vector_db

self.cache = {} # Query cache for performance

def search(self, query, filters=None, top_k=10):

# Check cache first

cache_key = f"{query}_{str(filters)}"if cache_key in self.cache:

return self.cache[cache_key]

# Generate query embedding

query_embedding = self.embedding_model.encode(query)

# Perform vector search

results = self.vector_db.query(

query_embedding,

k=top_k * 2, # Get extra for filtering

filters=filters

)

# Re-rank results

ranked_results = self.rerank_results(query, results)

# Cache results

self.cache[cache_key] = ranked_results[:top_k]

return ranked_results[:top_k]

def rerank_results(self, query, results):

# Advanced re-ranking considering multiple factors

reranked = []

for result in results:

score = self.calculate_relevance_score(query, result)

reranked.append((result, score))

return sorted(reranked, key=lambda x: x[1], reverse=True)

def calculate_relevance_score(self, query, result):

# Multi-factor relevance scoring

semantic_similarity = result['similarity_score']

freshness = self.calculate_freshness_score(result['timestamp'])

popularity = self.calculate_popularity_score(result['view_count'])

authority = self.calculate_authority_score(result['source_quality'])

return (

0.6 * semantic_similarity +

0.2 * freshness +

0.1 * popularity +

0.1 * authority

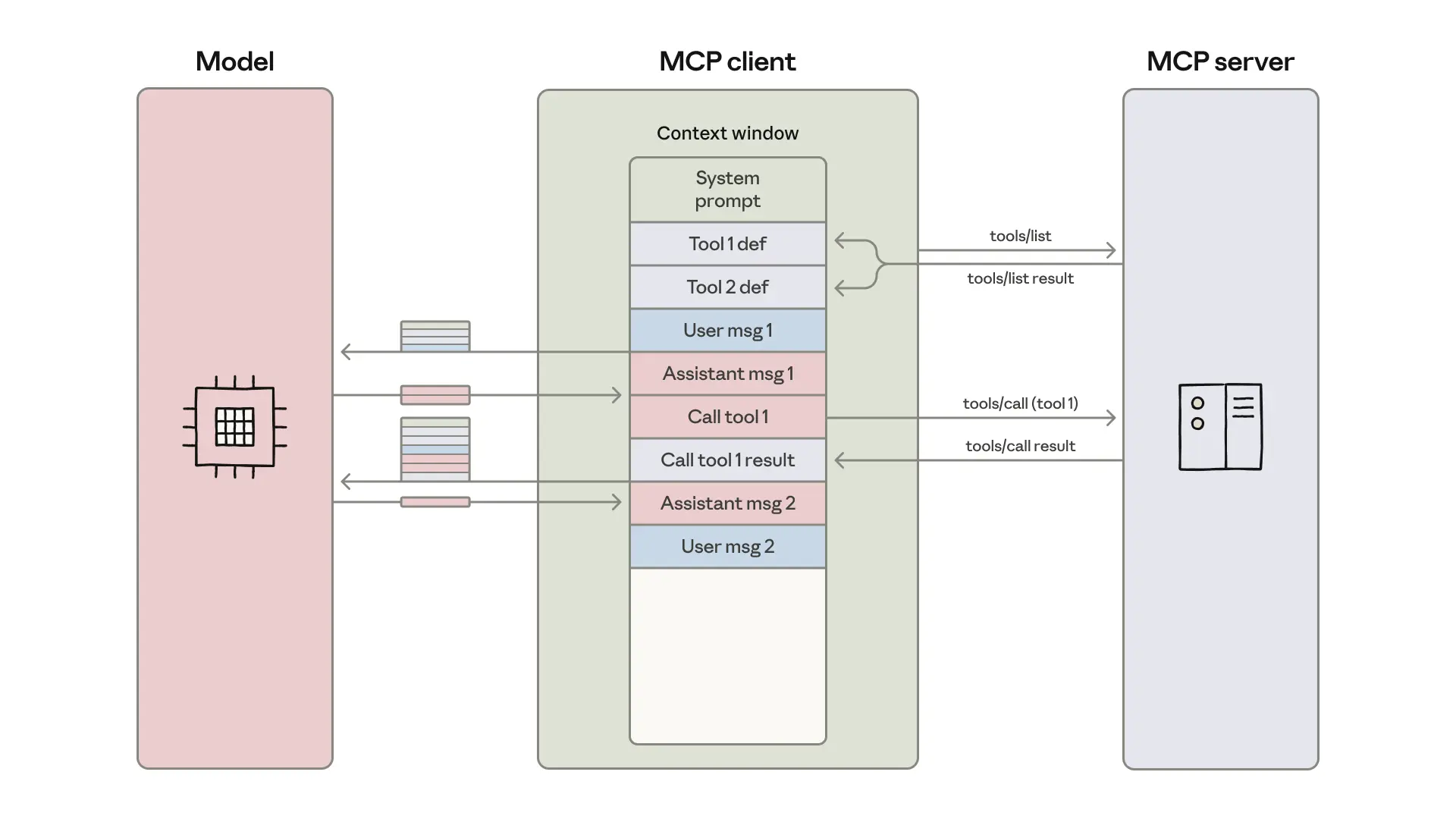

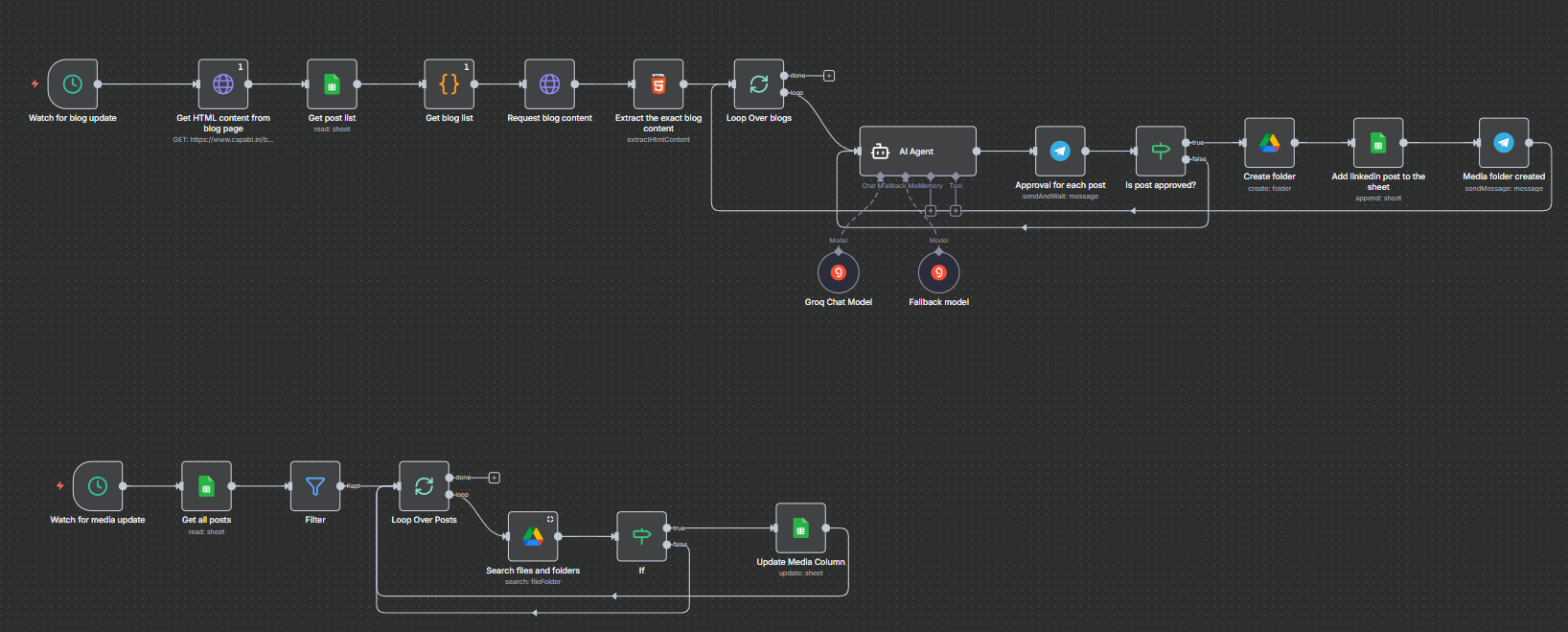

)Vector Search in Retrieval Augmented Generation (RAG) Systems

RAG architecture combines retrieval and generation:

RAG Workflow:

- Query processing: Understand user question

- Vector retrieval: Find relevant context

- Context augmentation: Combine with query

- Response generation: Create informed response
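The four steps above can be sketched end to end in a few lines. Everything model-related here is a placeholder: `embed` is a word-count stand-in for a real embedding model, `fake_llm` stands in for a real LLM call, and the three chunks are an invented knowledge base.

```python
import numpy as np

chunks = [
    "Vector databases index embeddings for fast similarity search",
    "RAG retrieves relevant context before the model generates a response",
    "Cosine similarity compares the direction of two vectors",
]

# Stand-in for a real embedding model: word counts over the chunk vocabulary
vocab = sorted({w for c in chunks for w in c.lower().split()})

def embed(text):
    tokens = text.lower().split()
    vec = np.array([tokens.count(w) for w in vocab], dtype=float)
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec

chunk_matrix = np.stack([embed(c) for c in chunks])

def retrieve(query, k=1):
    """Vector retrieval: return the k chunks most similar to the query."""
    scores = chunk_matrix @ embed(query)
    return [chunks[i] for i in np.argsort(-scores)[:k]]

def build_prompt(query, context_chunks):
    """Context augmentation: combine retrieved chunks with the user question."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

def fake_llm(prompt):
    """Placeholder for a real LLM call; it only reports what it received."""
    return f"[placeholder answer; prompt was {len(prompt)} characters]"

query = "how does rag use context"
prompt = build_prompt(query, retrieve(query, k=1))
print(prompt)
print(fake_llm(prompt))
```

The generation step only ever sees what retrieval hands it, so retrieval quality sets the ceiling for answer quality.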

Advanced RAG Implementation:

    class AdvancedRAGSystem:
        # QueryAnalyzer, ResponseEvaluator and the helper methods called below
        # (get_temporal_context, combine_contexts, try_query_expansion, ...) are
        # assumed to be defined elsewhere in the application.
        CONFIDENCE_THRESHOLD = 0.7

        def __init__(self, llm, embedding_model, vector_db):
            self.llm = llm
            self.embedding_model = embedding_model
            self.vector_db = vector_db
            self.query_analyzer = QueryAnalyzer()
            self.response_evaluator = ResponseEvaluator()

        def generate_response(self, query, conversation_history=None):
            # Analyze query intent and requirements
            query_analysis = self.query_analyzer.analyze(query)

            # Generate query embedding
            query_embedding = self.embedding_model.encode(query)

            # Retrieve relevant context
            context = self.retrieve_context(
                query_embedding,
                query_analysis,
                conversation_history
            )

            # Generate response
            response = self.llm.generate(
                query=query,
                context=context,
                history=conversation_history
            )

            # Evaluate response quality
            evaluation = self.response_evaluator.evaluate(
                query=query,
                response=response,
                context=context
            )

            # If response quality is low, try alternative strategies
            if evaluation['confidence'] < self.CONFIDENCE_THRESHOLD:
                response, evaluation = self.handle_low_confidence(
                    query, context, response, evaluation
                )
            return response, context, evaluation

        def retrieve_context(self, query_embedding, query_analysis, history):
            # Multi-strategy retrieval: one entry per retrieval strategy
            candidate_contexts = [
                self.vector_db.query(query_embedding, k=5),
                self.get_temporal_context(query_analysis),
                self.get_conversation_context(history),
                self.get_entity_based_context(query_analysis['entities'])
            ]

            # Combine and deduplicate context
            combined_context = self.combine_contexts(candidate_contexts)
            return self.rerank_context(combined_context, query_analysis)

        def handle_low_confidence(self, query, context, response, evaluation):
            # Fallback strategies, wrapped in lambdas so each one runs only if the
            # previous ones failed (escalating to a human is the last resort)
            strategies = [
                lambda: self.try_query_expansion(query),
                lambda: self.try_alternative_retrieval(query),
                lambda: self.try_different_generation_parameters(),
                lambda: self.escalate_to_human_agent(query, response)
            ]
            for strategy in strategies:
                new_response = strategy()
                new_evaluation = self.response_evaluator.evaluate(
                    query=query,
                    response=new_response,
                    context=context
                )
                if new_evaluation['confidence'] >= self.CONFIDENCE_THRESHOLD:
                    return new_response, new_evaluation
            # No strategy cleared the threshold; keep the original response
            return response, evaluation

Choosing Similarity Metrics: Cosine, Euclidean, and Beyond

Metric Selection Guidelines:

    def select_similarity_metric(data_type, use_case, normalization=True):
        """
        Select a similarity metric based on data type and use case.
        Raises KeyError for combinations not listed in the table below.
        """
        metrics = {
            'text': {
                'semantic_search': 'cosine',
                'clustering': 'cosine',
                'classification': 'cosine'
            },
            'image': {
                'similarity': 'cosine',
                'clustering': 'euclidean',
                'anomaly_detection': 'euclidean'
            },
            'audio': {
                'similarity': 'cosine',
                'clustering': 'euclidean'
            }
        }
        base_metric = metrics[data_type][use_case]

        if base_metric == 'cosine':
            # Cosine similarity ignores vector length, so normalization
            # does not change the choice
            return 'cosine'
        return 'normalized_euclidean' if normalization else base_metric
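One reason the `normalization` flag matters: for unit-length vectors, squared Euclidean distance and cosine similarity are tied by the identity ||a - b||² = 2 - 2·cos(a, b), so both metrics rank neighbours in the same order. The sketch below checks this numerically on random data, which is an assumption of the example rather than anything from a real workload.

```python
import numpy as np

rng = np.random.default_rng(42)
vectors = rng.normal(size=(200, 16))
query = rng.normal(size=16)

# L2-normalize the stored vectors and the query
unit_vectors = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
unit_query = query / np.linalg.norm(query)

cos_sim = unit_vectors @ unit_query
sq_dist = np.sum((unit_vectors - unit_query) ** 2, axis=1)

# The identity holds up to floating-point error
identity_holds = np.allclose(sq_dist, 2 - 2 * cos_sim)

# Highest cosine similarity and smallest distance pick the same neighbours
top_by_cosine = np.argsort(-cos_sim)[:10]
top_by_distance = np.argsort(sq_dist)[:10]

print("identity holds:", identity_holds)
print("same top-10:", np.array_equal(top_by_cosine, top_by_distance))
```

Without normalization the two metrics can disagree, because Euclidean distance also reacts to differences in vector length.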

    def optimize_metric_parameters(metric, data_characteristics):
        """
        Choose metric parameters from a dict of boolean data characteristics,
        e.g. {'high_dimensionality': True, 'sparse_data': False}.
        """
        optimization_strategies = {
            'cosine': {
                'high_dimensionality': {'normalize': True},
                'sparse_data': {'normalize': True, 'handle_sparsity': True},
                'dense_data': {'normalize': False}
            },
            'euclidean': {
                'high_dimensionality': {'pca_preprocessing': True},
                'varying_scales': {'normalize': True},
                'uniform_scales': {'normalize': False}
            }
        }
        strategy = optimization_strategies[metric]

        params = {}
        for characteristic, setting in strategy.items():
            # .get() treats characteristics missing from the input as False
            if data_characteristics.get(characteristic, False):
                params.update(setting)
        return params

Conclusion: Building the Future of AI Memory Systems

The field of AI memory is evolving rapidly, with new breakthroughs in embeddings, vector databases, and retrieval techniques emerging constantly. Mastering these technologies is essential for building scalable, efficient, and intelligent AI systems that can truly understand and remember.

Key Takeaways:

- Embeddings are fundamental: They transform qualitative data into quantitative representations that capture semantic meaning

- Vector databases are essential: Specialized infrastructure for efficient storage and retrieval of high-dimensional data

- Semantic search enables understanding: Moves beyond keyword matching to true intent understanding

- RAG systems combine retrieval and generation: Create more accurate and context-aware AI responses

- Optimization is multidimensional: Requires addressing hardware, software, and algorithmic challenges

Future Directions:

- Multimodal embeddings: Unified representations across text, image, audio, and video

- Real-time learning: Continuous updating of embeddings and indices

- Federated learning: Distributed AI memory across edge devices

- Quantum-inspired algorithms: New approaches to high-dimensional similarity search

- Self-optimizing systems: AI that automatically optimizes its own memory management

Additional Resources

Learning Materials

- Vector Databases 101 - Comprehensive beginner's guide

- Advanced Embedding Techniques - Deep dive into embedding models

- RAG System Design - Architectural patterns for RAG

Tools and Frameworks

- LangChain - Framework for AI applications

- Chroma - Open-source vector database

- Sentence Transformers - Embedding models

Research Papers

- Efficient Vector Similarity Search - Latest algorithms

- Memory Optimization in LLMs - Techniques for large models

- Multimodal Embeddings - Cross-modal representations

Communities

- AI Memory Researchers Forum - Academic discussions

- Vector Database Users Group - Practical implementations

- RAG Practitioners Community - Real-world applications

Disclaimer: This guide provides technical information for educational purposes. AI technologies evolve rapidly, and specific implementations may vary. Always validate approaches for your specific use case and consult official documentation for the tools and frameworks you use. Performance characteristics may vary based on hardware, software versions, and specific workloads.
