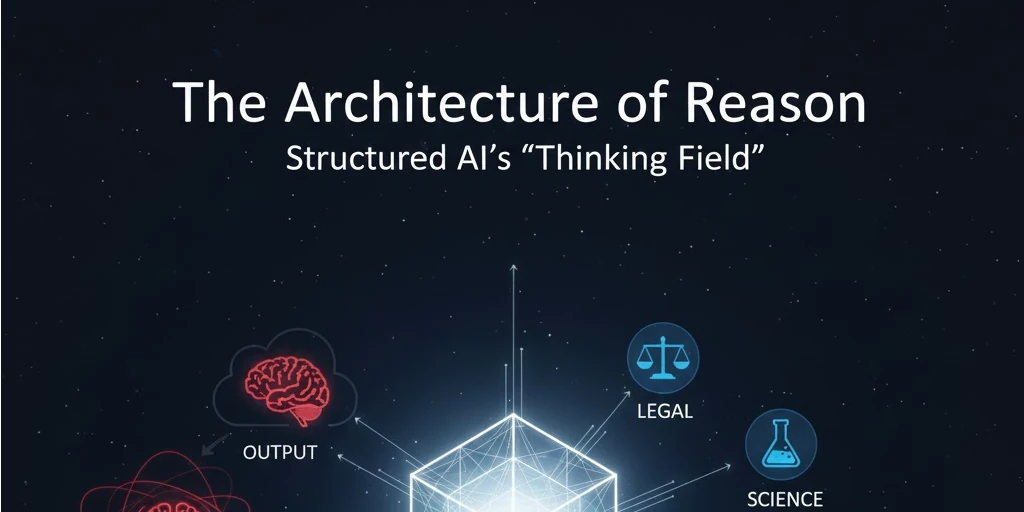

The Architecture of Reason: Why Structured AI Needs a "Thinking Field"

In the rush to build AI agents, most developers focus on the output: the final JSON, the generated code, or the formatted report. However, as we move from simple chatbots to complex autonomous agents, we encounter a fundamental limitation of Large Language Models (LLMs): they are statistically brilliant but logically fragile.

To address this, we can turn to a technique that is increasingly used to make structured output more reliable: the Thinking Field.

The Theoretical Problem: The Autoregressive Trap

To understand why AI fails at complex tasks, we must understand how it "thinks." LLMs are autoregressive. This means they generate text one token at a time, where each new token is conditioned only on the tokens that came before it: the prompt plus everything the model has generated so far.

The Analogy: Imagine trying to solve a complex math problem, but you are forced to speak your answer out loud, one syllable at a time, without ever being allowed to pause or use a scratchpad. If you start saying "The answer is forty-..." and realize halfway through that the answer is actually fifty, you are stuck. You cannot "backspace" your speech.

When we ask an AI for a structured JSON object (like a project schedule or a financial breakdown), we are forcing it into this trap. If the AI starts writing the start_date for a task before it has fully calculated the dependencies of the entire project, it will likely hallucinate a date just to keep the JSON syntax valid.
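The trap can be illustrated with a toy decoding loop. This is a minimal sketch, not a real model: `toy_next_token` is a hypothetical stand-in that looks up the next token from the last one, and `generate` is an illustrative name. The point is the shape of the loop: each step sees only the prefix, and every appended token is final.

```python
from typing import Callable, List


def generate(next_token: Callable[[List[str]], str],
             prompt: List[str], max_new: int) -> List[str]:
    """Greedy autoregressive loop: append one token at a time, never revise."""
    tokens = list(prompt)
    for _ in range(max_new):
        token = next_token(tokens)  # sees only the prefix built so far
        if token == "<eos>":
            break
        tokens.append(token)        # committed: there is no backspace
    return tokens[len(prompt):]


def toy_next_token(prefix: List[str]) -> str:
    """Hypothetical stand-in for a model: a fixed lookup on the last token."""
    table = {"answer": "is", "is": "forty", "forty": "<eos>"}
    return table.get(prefix[-1], "<eos>")


output = generate(toy_next_token, ["the", "answer"], max_new=5)
print(output)
```

Once "forty" has been appended, nothing in the loop can turn it into "fifty"; the only way to get a better answer is to have better context in the prefix before that token is chosen.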

The Solution: Externalizing the Latent Space

The "Thinking Field" is a dedicated space within a structured schema (like a Pydantic model or a JSON schema) that forces the model to externalize its internal "latent" reasoning into explicit tokens before it reaches the data fields.

By placing thinking at the top of the schema, we fundamentally change the computational flow of the model.
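As a rough sketch, a JSON schema with the reasoning field declared first might look like the following. The field names (`thinking`, `task_name`, `start_date`) are illustrative assumptions, and whether a given model or provider actually emits fields in schema order is also an assumption worth verifying for your setup.

```python
import json

# Hypothetical schema for one scheduled task; field names are illustrative.
# "thinking" is declared first so that a model filling fields in schema
# order writes its reasoning before any data field.
TASK_SCHEMA = {
    "type": "object",
    "properties": {
        "thinking": {
            "type": "string",
            "description": "Step-by-step reasoning, written before the data fields.",
        },
        "task_name": {"type": "string"},
        "start_date": {"type": "string", "format": "date"},
    },
    "required": ["thinking", "task_name", "start_date"],
    "additionalProperties": False,
}

# Python dicts keep insertion order, so the serialized schema keeps it too.
field_order = list(TASK_SCHEMA["properties"])
schema_text = json.dumps(TASK_SCHEMA, indent=2)
print(field_order)
```

The same idea carries over to a Pydantic model by declaring the reasoning field before the data fields.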

The Three Theoretical Pillars

1. Dual Process Theory (System 1 vs. System 2)

In his seminal work Thinking, Fast and Slow, Daniel Kahneman describes two systems of thought:

- System 1: Fast, instinctive, and emotional (Pattern Matching).

- System 2: Slower, more deliberative, and logical (Reasoning).

By analogy, standard LLM prompting often produces System 1 behavior: the model pattern-matches straight to an answer. Mandating a thinking field pushes it toward System 2 behavior. It must slow down, decompose the problem, and "talk to itself."

2. The Attention Feedback Loop

From a Transformer architecture perspective, the thinking field acts as a computational scratchpad. Because of the Self-Attention mechanism, every token the model generates for the result field can "attend" to the tokens it just generated in the thinking field. The model is no longer just predicting the answer based on your prompt; it is predicting the answer based on its own written-out reasoning.
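A small sketch of the visibility rule behind this, assuming a decoder-only Transformer with a causal attention mask. The token layout (two prompt tokens, three thinking tokens, two result tokens) and the helper names are hypothetical; real attention weights are learned, and this only shows which positions are allowed to be attended to.

```python
from typing import List


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


# Hypothetical token layout: prompt first, then thinking, then result.
segments = ["prompt"] * 2 + ["thinking"] * 3 + ["result"] * 2
mask = causal_mask(len(segments))


def visible_segments(position: int) -> List[str]:
    """Distinct segments visible from `position`, in order of first appearance."""
    seen: List[str] = []
    for j, allowed in enumerate(mask[position]):
        if allowed and segments[j] not in seen:
            seen.append(segments[j])
    return seen


first_thinking = segments.index("thinking")
first_result = segments.index("result")
print(visible_segments(first_result))    # includes the thinking tokens
print(visible_segments(first_thinking))  # does not include any result token
```

This is why field order matters: if the result field came first, its tokens would be generated with no thinking tokens available to attend to.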

3. Constraint Satisfaction Problems (CSP)

Most high-value AI tasks are actually Constraint Satisfaction Problems. LLMs struggle with CSPs because constraints are often global, while token prediction is local.

- The Thinking Field allows the model to perform Global Constraint Checking.

- It can list the rules (e.g., "Task A must end before B") and simulate the solution in text before committing to the final data points.
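The kind of global check described above can also be written down in code. The sketch below is a hypothetical validator, not part of any library: it reads "Task A must end before B" as "A's end date is strictly earlier than B's start date", and the task names and dates are made up for illustration.

```python
from datetime import date
from typing import Dict, List, Tuple

Schedule = Dict[str, Tuple[date, date]]  # task name -> (start, end)


def violated(schedule: Schedule, must_precede: List[Tuple[str, str]]) -> List[str]:
    """Return a message for every 'first must end before second starts' rule that fails."""
    problems = []
    for first, second in must_precede:
        first_end = schedule[first][1]
        second_start = schedule[second][0]
        if first_end >= second_start:
            problems.append(f"{first} must end before {second} starts")
    return problems


rules = [("A", "B")]
draft = {
    "A": (date(2025, 1, 1), date(2025, 1, 10)),
    "B": (date(2025, 1, 8), date(2025, 1, 15)),   # starts before A ends
}
fixed = {
    "A": (date(2025, 1, 1), date(2025, 1, 10)),
    "B": (date(2025, 1, 11), date(2025, 1, 15)),
}

print(violated(draft, rules))
print(violated(fixed, rules))
```

A check like this can run on the final data fields as a safety net, while the thinking field gives the model room to perform the same rule-by-rule walk in text before it commits to dates.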

Applications Across Industries

This technique is project-agnostic. If your AI does more than just summarize text, it needs a thinking field.

The Future

Beyond accuracy, the "Thinking Field" helps address the Black Box Problem. In a professional setting, "The AI said so" is not an acceptable justification.

By capturing the thinking field, you create an audit trail. You move from Generative AI (which creates things) to Reasoning AI (which solves things). This transparency is the key to user trust and the safe deployment of AI in critical infrastructure.
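One way to capture that trail is to split the reasoning from the data when the response arrives and store both. This is a minimal sketch under stated assumptions: the response is a JSON object with a top-level `thinking` key, and `split_response`, `audit_record`, and the `req-001` identifier are hypothetical names. The stored text is what the model wrote, which is useful for review but is not a guarantee of how it actually arrived at the result.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, Tuple


def split_response(raw: str) -> Tuple[str, Dict[str, Any]]:
    """Separate the reasoning text from the data fields of a JSON response."""
    payload = json.loads(raw)
    thinking = payload.pop("thinking")
    return thinking, payload


def audit_record(request_id: str, raw: str) -> Dict[str, Any]:
    """Build a log entry that keeps the reasoning next to the result it produced."""
    thinking, result = split_response(raw)
    return {
        "request_id": request_id,
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "thinking": thinking,
        "result": result,
    }


raw_response = (
    '{"thinking": "B depends on A, so B starts after A ends.", '
    '"start_date": "2025-01-11"}'
)
record = audit_record("req-001", raw_response)
print(json.dumps(record, indent=2))
```

Downstream code consumes only `record["result"]`, while reviewers can read `record["thinking"]` when a decision needs to be explained.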

Conclusion

The next generation of AI development isn't about bigger models; it's about better architectures. By implementing a "Thinking Field," you are giving your agent the one thing it lacks by default: the ability to stop and think before it speaks.
